Hi everyone, this is Esar from GeekFeed.

AutoGluon is an open source automated machine learning (AutoML) library launched by Amazon Web Services. It is easy to use and beginner friendly. Although AutoGluon has been around for almost a couple of years, it is not yet very well known. This article gives a brief introduction to this powerful tool and an easy guide to getting started with it quickly.


Introduction

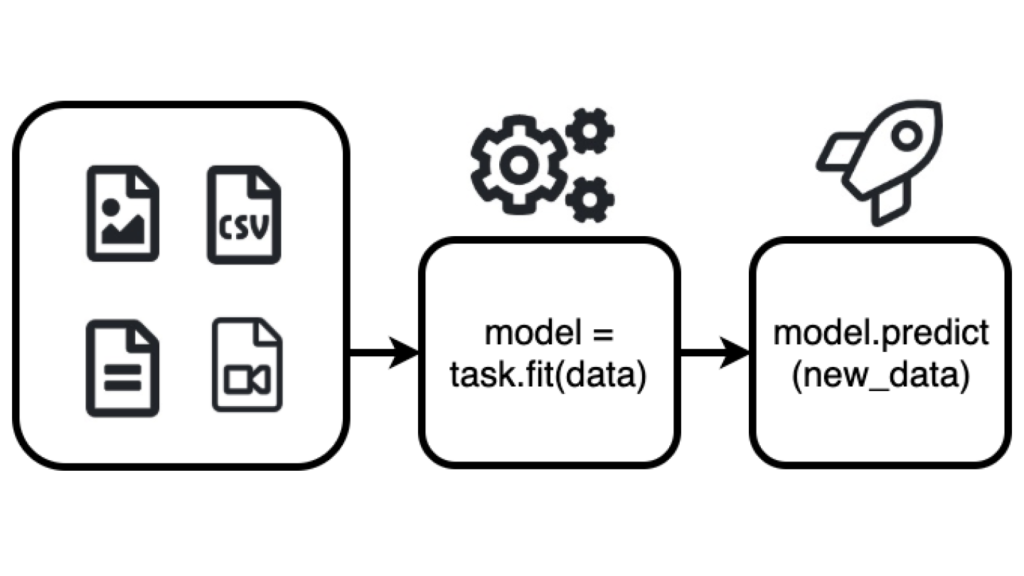

AutoGluon is a relatively new open source AutoML library from Amazon Web Services (AWS) that automates machine learning (ML) and deep learning (DL) tasks involving text, image, and tabular data.

Designed to be easy to use and easy to extend, AutoGluon is suitable for both machine learning beginners and experts. Whether you are new to ML or experienced, AutoGluon lets you develop and improve a state-of-the-art ML model with just a few lines of Python code.

Why use AutoGluon

Traditionally, achieving state-of-the-art ML performance required extensive background knowledge in areas such as data preparation, missing-data handling, and hyperparameter selection, the last of which is one of the most difficult tasks.

With AutoGluon, the hyperparameters of each task are selected automatically using advanced tuning algorithms. You don’t need any familiarity with the underlying models, because all hyperparameters are tuned automatically within default ranges that are known to perform well for the particular task and model.
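To make the idea of “tuning within default ranges” more concrete, here is a minimal sketch of plain random search in standard-library Python. It only illustrates the general concept: AutoGluon’s own tuning algorithms are more advanced, and the search space and toy objective below are hypothetical.

```python
import random


def random_search(objective, search_space, n_trials=50, seed=0):
    """Minimal random search: sample each hyperparameter uniformly from its
    (low, high) range and keep the configuration with the lowest objective."""
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(n_trials):
        config = {name: rng.uniform(low, high) for name, (low, high) in search_space.items()}
        score = objective(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical objective: pretend the validation error is smallest at learning_rate = 0.1.
def toy_validation_error(config):
    return (config["learning_rate"] - 0.1) ** 2


# Hypothetical "default range" for a single hyperparameter.
space = {"learning_rate": (0.01, 0.3)}
best, err = random_search(toy_validation_error, space)
print(best, err)
```

The point of a predefined range is that the search never wastes trials on values that are known to be unreasonable for the model.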

Predicting a Column in a Table with Tabular Prediction

In addition to Tabular Prediction, AutoGluon offers several other applications, such as Image Prediction, Object Detection, and Text Prediction.

In this article, we demonstrate how to get started with AutoGluon quickly using Tabular Prediction.

Installation

AutoGluon requires Python 3.6, 3.7, or 3.8 (for the release used in this article).

Linux and macOS are currently the only fully supported operating systems (a Windows version may become available soon). If you are a Windows user, we recommend using Jupyter Notebook or Google Colab for a quick start with AutoGluon.

```shell
python3 -m pip install -U pip
python3 -m pip install -U setuptools wheel
python3 -m pip install -U "mxnet<2.0.0"
python3 -m pip install autogluon
```
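Because the pip commands above assume a supported interpreter, a quick sanity check can save some confusion. The sketch below compares the running Python version with the 3.6 to 3.8 range listed above. That range reflects the AutoGluon release used in this article, so check the official installation guide for the versions supported by the release you actually install.

```python
import sys

# Python (major, minor) versions listed as supported in this article.
SUPPORTED_VERSIONS = {(3, 6), (3, 7), (3, 8)}


def is_supported(version_info=None) -> bool:
    """Return True if the given (or current) Python version is in SUPPORTED_VERSIONS."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) in SUPPORTED_VERSIONS


print("Python", sys.version.split()[0], "supported:", is_supported())
```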

Import AutoGluon Classes and Load Train Dataset

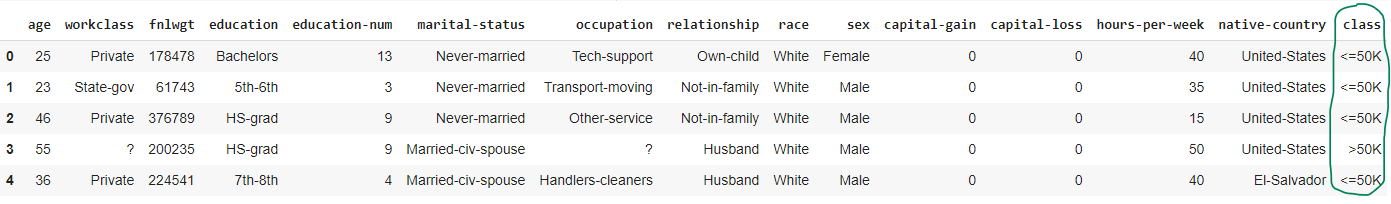

Using a sample dataset provided by AWS, let’s build a classification model with AutoGluon that predicts whether or not a person’s income exceeds $50,000.

First, import AutoGluon’s TabularPredictor and TabularDataset classes, then load the training data from a CSV file into a TabularDataset object.

Here we load a sample dataset file provided by AutoGluon and stored in an AWS S3 bucket.

Then let’s view the first 5 rows of the dataset.

```python
from autogluon.tabular import TabularDataset, TabularPredictor

train_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/train.csv')
train_data.head()
```

Note: a TabularDataset behaves like a pandas DataFrame, so the same methods can be applied to both.
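Since the loaded data behaves like a pandas DataFrame, the familiar pandas methods apply. The sketch below uses plain pandas with a few invented rows instead of the real S3 file, so it runs offline; only the age and class column names come from this article, and the other column and all values are hypothetical.

```python
import io

import pandas as pd

# A few hypothetical rows in CSV form; the values are invented for illustration.
csv_text = """age,hours-per-week,class
25,40,<=50K
52,50,>50K
31,38,<=50K
44,60,>50K
19,20,<=50K
36,45,<=50K
"""

df = pd.read_csv(io.StringIO(csv_text))

print(df.head())  # first 5 rows, like train_data.head()
print(df.shape)   # (number of rows, number of columns)
```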

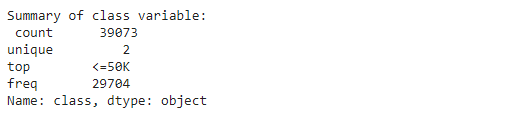

For convenience, let’s store the name of the column we want to predict (the target column) in a variable called label.

```python
label = 'class'
print("Summary of class variable: \n", train_data[label].describe())
```
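For a text column such as class, pandas’ describe() reports the number of non-null values (count), the number of distinct values (unique), the most frequent value (top), and how often it occurs (freq). A small sketch with hypothetical labels:

```python
import pandas as pd

# Hypothetical label values; the real class column comes from the CSV file.
labels = pd.Series(["<=50K", "<=50K", ">50K", "<=50K", ">50K"], name="class")

summary = labels.describe()
print(summary)
# count 5, unique 2, top "<=50K", freq 3
```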

Train Column

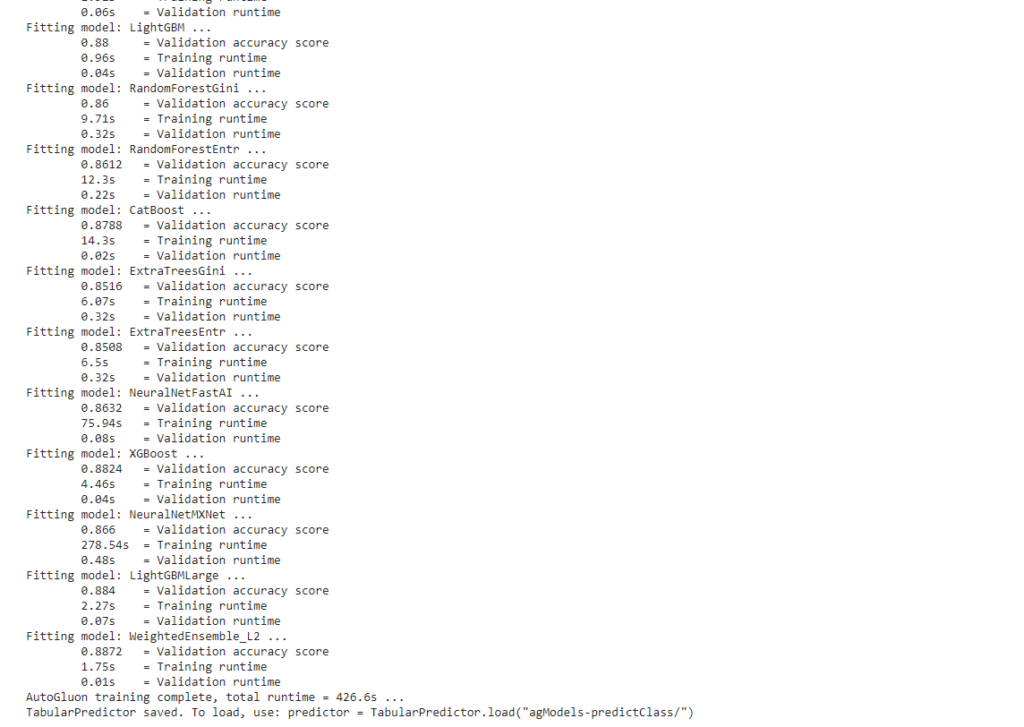

With a single fit() call, AutoGluon can produce highly accurate models that predict the values in one column of a data table based on the values of the other columns. Now let’s specify a folder to store the trained models, then call fit() to train multiple models on train_data.

```python
save_path = 'agModels-predictClass'  # specifies folder to store trained models
predictor = TabularPredictor(label=label, path=save_path).fit(train_data)
```

Note: training time might vary depending on the dataset’s size and complexity.
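Conceptually, part of what fit() does is train several candidate models and compare them on held-out validation data. The toy sketch below shows only that selection step, using two hand-written “models” (a majority-class baseline and a hypothetical one-feature threshold rule) on invented data. AutoGluon’s real pipeline trains actual ML models and also ensembles them, so treat this purely as an illustration of the idea.

```python
from collections import Counter

# Invented (hours_per_week, label) pairs, split into training and validation parts.
train = [(40, "<=50K"), (20, "<=50K"), (60, ">50K"), (35, "<=50K"), (55, ">50K")]
valid = [(48, ">50K"), (30, "<=50K"), (50, ">50K"), (25, "<=50K")]


def fit_majority(rows):
    """Candidate 1: always predict the most common training label."""
    majority = Counter(label for _, label in rows).most_common(1)[0][0]
    return lambda hours: majority


def fit_threshold(rows):
    """Candidate 2 (hypothetical rule): predict '>50K' when hours exceed the
    midpoint between the mean hours of the two classes in the training data."""
    low = [h for h, label in rows if label == "<=50K"]
    high = [h for h, label in rows if label == ">50K"]
    cut = (sum(low) / len(low) + sum(high) / len(high)) / 2
    return lambda hours: ">50K" if hours > cut else "<=50K"


def accuracy(model, rows):
    """Fraction of rows whose prediction matches the true label."""
    return sum(model(h) == label for h, label in rows) / len(rows)


# Fit every candidate on the training part, score it on the validation part.
candidates = {"majority": fit_majority(train), "threshold": fit_threshold(train)}
scores = {name: accuracy(model, valid) for name, model in candidates.items()}
best_name = max(scores, key=scores.get)
print(scores, "-> best:", best_name)
```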

Load Test Dataset

Next, load a separate test dataset for prediction.

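Since we want to compare the original values with the predicted values, we load the test file (test.csv) from the same AWS S3 bucket. We keep its class column aside as the ground truth and drop it from the data we pass to the predictor, to show that AutoGluon is not “cheating” by looking at the answers.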

```python
test_data = TabularDataset('https://autogluon.s3.amazonaws.com/datasets/Inc/test.csv')
y_test = test_data[label]  # values to predict
test_data_nolab = test_data.drop(columns=[label])  # delete label column
test_data_nolab.head()
```

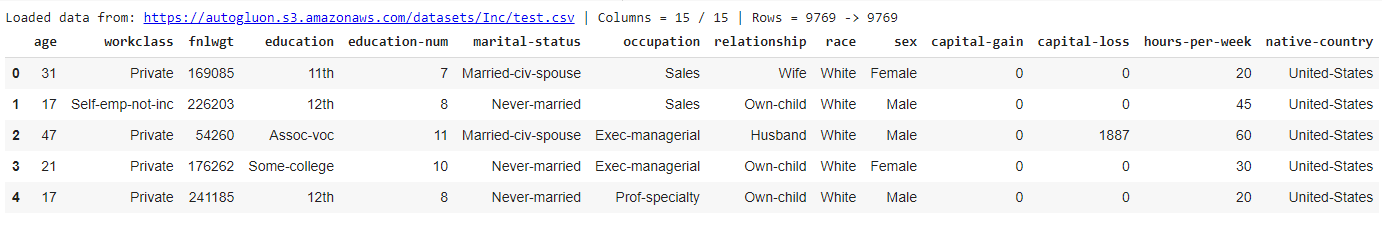

The output should show the test data without the class column.
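The separation described above can be checked on a toy DataFrame: drop(columns=[...]) returns a new DataFrame without the label column and leaves the original untouched, so the true labels remain available for evaluation. The rows below are hypothetical stand-ins for test.csv.

```python
import pandas as pd

label = "class"
# Hypothetical test rows standing in for test.csv.
test_data = pd.DataFrame({"age": [25, 47, 33], label: ["<=50K", ">50K", "<=50K"]})

y_test = test_data[label]                          # ground-truth labels, kept aside
test_data_nolab = test_data.drop(columns=[label])  # features only, passed to the model

print(list(test_data_nolab.columns))  # only the feature columns remain
print(label in test_data.columns)     # the original DataFrame still has the label
```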

Predict Column

Use the predictor trained on train_data to make predictions on the test data (test_data_nolab), then evaluate them.

```python
# optional: reload the trained predictor from disk (not needed if `predictor` is still in memory)
predictor = TabularPredictor.load(save_path)

y_pred = predictor.predict(test_data_nolab)
# printing the Series shows the predictions for the first 5 and last 5 rows
print("Predictions: \n", y_pred)
perf = predictor.evaluate_predictions(y_true=y_test, y_pred=y_pred, auxiliary_metrics=True)
```

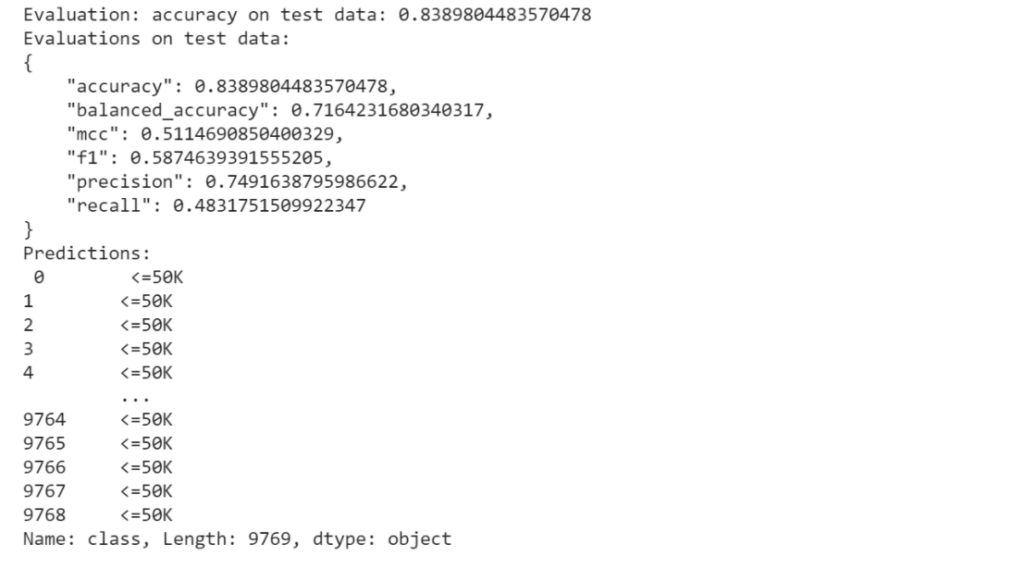

We can see the predicted class values for the first 5 and last 5 rows, and the evaluated accuracy is 0.8.
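Accuracy is the fraction of rows whose predicted class equals the true class. The sketch below computes it by hand with pandas on hypothetical labels; with the article’s variables the equivalent expression would be (y_pred == y_test).mean(), assuming both Series share the same index.

```python
import pandas as pd

# Hypothetical true and predicted labels for five rows.
y_true = pd.Series(["<=50K", ">50K", "<=50K", "<=50K", ">50K"])
y_hat = pd.Series(["<=50K", ">50K", ">50K", "<=50K", ">50K"])

# Element-wise comparison gives booleans; their mean is the share of correct rows.
accuracy = (y_hat == y_true).mean()
print(accuracy)  # 4 of 5 rows match
```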

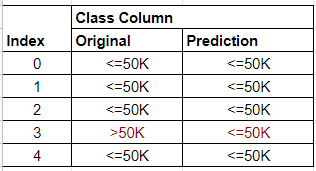

Just for fun, we can also take the first 5 rows and compare the original values with the predicted results.
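One way to build such a side-by-side comparison with pandas is sketched below. The helper name compare_first_rows is hypothetical (it is not part of AutoGluon); with the variables from this article you would call compare_first_rows(y_test, y_pred).

```python
import pandas as pd


def compare_first_rows(y_true: pd.Series, y_pred: pd.Series, n: int = 5) -> pd.DataFrame:
    """Hypothetical helper: put the first n true and predicted values side by side."""
    comparison = pd.DataFrame(
        {
            "original": y_true.head(n).to_numpy(),
            "predicted": y_pred.head(n).to_numpy(),
        },
        index=y_true.head(n).index,
    )
    # Flag rows where the prediction matches the original value.
    comparison["match"] = comparison["original"] == comparison["predicted"]
    return comparison


# Usage with hypothetical labels:
y_true = pd.Series(["<=50K", ">50K", "<=50K", "<=50K", ">50K", "<=50K"])
y_hat = pd.Series(["<=50K", ">50K", ">50K", "<=50K", ">50K", "<=50K"])
result = compare_first_rows(y_true, y_hat)
print(result)
```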

Predict Column Probability

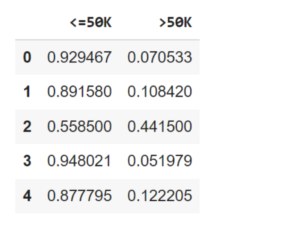

Instead of predicting class labels directly, we can output the predicted probability of each class for every row.

```python
pred_probs = predictor.predict_proba(test_data_nolab)
pred_probs.head(5)
```
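The probability output has one column per class, and each row’s probabilities add up to 1. Picking the column with the highest probability in each row yields the most likely class. The sketch below shows this with pandas on a hypothetical probability table; the column names mirror the two income classes, but the numbers are made up.

```python
import pandas as pd

# Hypothetical predicted probabilities for three rows (columns = classes).
pred_probs = pd.DataFrame(
    {"<=50K": [0.92, 0.30, 0.55], ">50K": [0.08, 0.70, 0.45]}
)

# Each row is a probability distribution over the classes.
row_sums = pred_probs.sum(axis=1)

# The column name with the largest probability in each row is the most likely class.
most_likely = pred_probs.idxmax(axis=1)
print(most_likely.tolist())
```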

Regression (Predicting Numeric Table Columns)

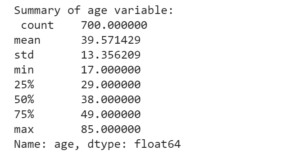

To demonstrate that fit() can also automatically handle regression tasks, we now try to predict the numeric age variable in the same table based on the other features:

```python
age_column = 'age'
print("Summary of age variable: \n", train_data[age_column].describe())
```
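AutoGluon decides on its own whether a label column calls for classification or regression. The function below is a deliberately simplified, hypothetical heuristic in the same spirit (two distinct values suggest binary classification, other text labels suggest multiclass, many distinct numeric values suggest regression). It is not AutoGluon’s actual inference rule, and the max_classes cutoff is an arbitrary assumption.

```python
import pandas as pd


def guess_problem_type(label_column: pd.Series, max_classes: int = 20) -> str:
    """Hypothetical, simplified guess at the problem type of a label column."""
    n_unique = label_column.nunique(dropna=True)
    if n_unique == 2:
        return "binary"
    if not pd.api.types.is_numeric_dtype(label_column):
        return "multiclass"
    # Numeric labels: a few distinct integer values look like classes,
    # otherwise treat the column as a continuous target.
    is_integer_like = (label_column.dropna() % 1 == 0).all()
    if is_integer_like and n_unique <= max_classes:
        return "multiclass"
    return "regression"


print(guess_problem_type(pd.Series(["<=50K", ">50K", "<=50K"])))
print(guess_problem_type(pd.Series(list(range(17, 60)))))
```

With the article’s data you could try it on train_data[label] and train_data[age_column].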

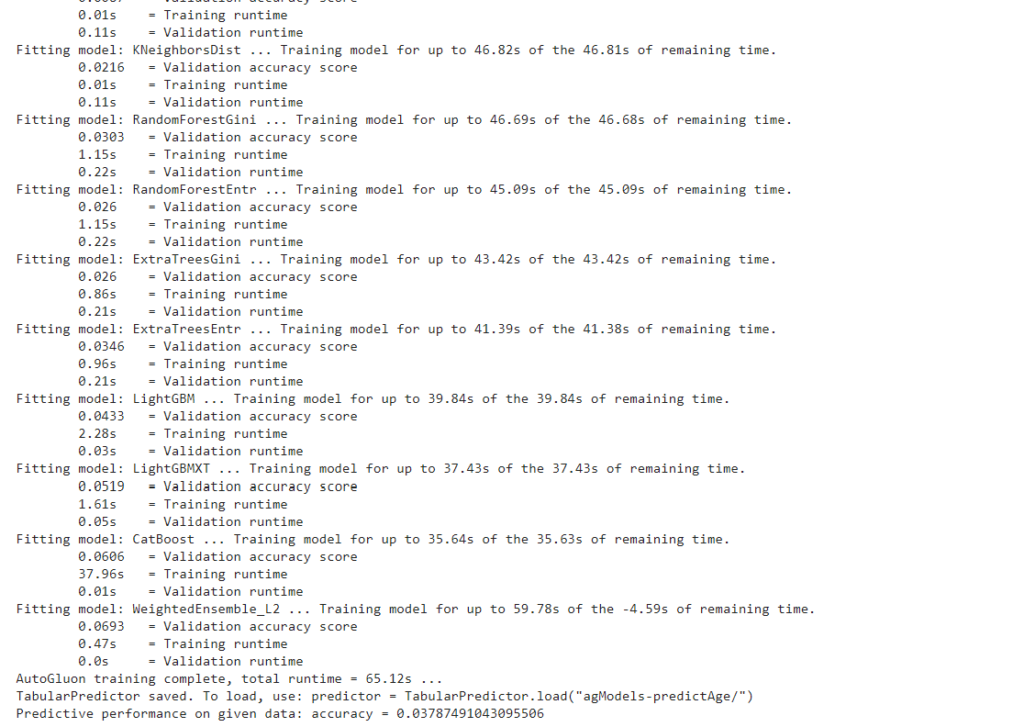

We call fit() again, this time imposing a time limit (in seconds), and also demonstrate a shorthand method, evaluate(), that scores the resulting model directly on the test data (which still contains the labels).

Note that we didn’t need to tell AutoGluon that this is a regression problem: it inferred this automatically from the data and reported the appropriate performance metric (RMSE by default).

```python
predictor_age = TabularPredictor(label=age_column, path="agModels-predictAge").fit(train_data, time_limit=60)
performance = predictor_age.evaluate(test_data)
```
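RMSE (root mean squared error) is the square root of the average squared difference between true and predicted values, RMSE = sqrt((1/n) * Σ (y_i - ŷ_i)²), expressed in the same units as the label (years, for age). A small standard-library sketch with made-up numbers follows. Note that AutoGluon reports scores in a “higher is better” form, so its RMSE output may appear with a negative sign.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical true and predicted ages.
true_ages = [30, 40, 50]
predicted_ages = [32, 38, 50]
print(rmse(true_ages, predicted_ages))  # sqrt((4 + 4 + 0) / 3), about 1.633
```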

That’s how quickly and easily we can use AutoGluon to predict a column in a table using Tabular Prediction.

For more details about Tabular Prediction and other AutoGluon applications, please refer to the official AutoGluon documentation website.
